The EU AI Act: A New Era in Artificial Intelligence Regulation

As artificial intelligence (AI) technology advances rapidly, the European Union has moved to ensure safety, transparency, and accountability in AI applications. The EU AI Act, proposed by the European Commission in April 2021 and in force since August 2024 with obligations phasing in over the following years, regulates AI technologies across the EU, making it a crucial topic for developers, businesses, and policymakers alike. Understanding its implications is essential for anyone who works in the tech industry or is interested in the ethical dimensions of AI.

Understanding the EU AI Act

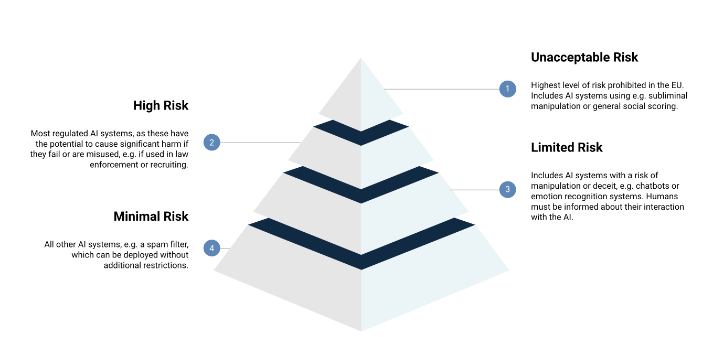

The EU AI Act establishes a comprehensive framework that categorizes AI systems by the level of risk they pose to individuals and society. It divides AI applications into four main categories: unacceptable risk, high risk, limited risk, and minimal risk. Unacceptable-risk systems, such as social scoring by governments, are banned outright. High-risk systems, which include applications in critical sectors like healthcare and transportation, must comply with strict regulatory standards. Limited-risk systems, such as chatbots, mainly carry transparency obligations, while minimal-risk systems face no additional requirements. This tiered approach is intended to ensure that AI technologies are developed and deployed responsibly, fostering public trust in the digital ecosystem.
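To make the four-tier structure concrete, here is a minimal Python sketch of how a team might record it internally. The names `RiskTier`, `EXAMPLE_USE_CASES`, `TIER_TREATMENT`, and `treatment_for` are hypothetical, the use-case table only mirrors the examples mentioned above, and the treatments for the limited and minimal tiers are a simplified assumption. It is an illustration, not a legal classification tool.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories named in the EU AI Act."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical lookup table: only the examples mentioned in this article.
EXAMPLE_USE_CASES = {
    "government social scoring": RiskTier.UNACCEPTABLE,
    "healthcare": RiskTier.HIGH,
    "transportation": RiskTier.HIGH,
}

# Simplified summary of how each tier is treated (assumption for illustration).
TIER_TREATMENT = {
    RiskTier.UNACCEPTABLE: "banned",
    RiskTier.HIGH: "strict compliance with regulatory standards",
    RiskTier.LIMITED: "transparency obligations",
    RiskTier.MINIMAL: "no additional obligations",
}


def treatment_for(use_case: str) -> str:
    """Return the treatment for a known example, or flag it for review."""
    tier = EXAMPLE_USE_CASES.get(use_case.lower())
    if tier is None:
        # Never guess a tier for an unknown use case.
        return "unclassified: needs legal review"
    return TIER_TREATMENT[tier]


if __name__ == "__main__":
    for case in ("Government social scoring", "Healthcare", "Spam filter"):
        print(f"{case}: {treatment_for(case)}")
```

The sketch deliberately refuses to assign a tier to anything outside its table, since real classification depends on the Act's own definitions and annexes rather than on a keyword match.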

Key Provisions and Compliance Requirements

A significant aspect of the EU AI Act is its focus on transparency and accountability. High-risk AI systems must meet stringent compliance requirements, including rigorous testing, detailed documentation, and continuous monitoring. In addition, the Act calls for impact assessments that evaluate the potential consequences of an AI system; for certain deployers this takes the form of a fundamental rights impact assessment. Transparency measures include clear labeling and user information to help individuals understand when they are dealing with AI and how it interacts with their data. Businesses and organizations operating in the EU must prioritize compliance, as the most serious violations can draw fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher, along with reputational damage.
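The requirements listed above can be tracked as a simple checklist. The following Python sketch assumes a hypothetical `HighRiskChecklist` record and `outstanding_items` helper; the field names paraphrase this article's wording and are not terms taken from the regulation itself.

```python
from dataclasses import dataclass, fields


@dataclass
class HighRiskChecklist:
    """Hypothetical tracker for the high-risk requirements named above."""
    rigorous_testing: bool = False
    detailed_documentation: bool = False
    continuous_monitoring: bool = False
    impact_assessment: bool = False


def outstanding_items(checklist: HighRiskChecklist) -> list[str]:
    """List the requirements not yet marked complete, in declaration order."""
    return [f.name for f in fields(checklist) if not getattr(checklist, f.name)]


if __name__ == "__main__":
    status = HighRiskChecklist(rigorous_testing=True, detailed_documentation=True)
    print("Still to do:", outstanding_items(status))
```

A flat checklist like this is only a starting point; in practice each item would link to evidence such as test reports or monitoring logs.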

Implications for Businesses and Innovators

The EU AI Act presents both challenges and opportunities for businesses and innovators. While compliance may involve significant investments and adjustments to existing practices, it also provides an opportunity to differentiate products in an increasingly conscientious market. By prioritizing ethical AI development, companies can position themselves as leaders in responsible technology, fostering customer loyalty and trust. Moreover, the regulations may inspire innovation, encouraging the development of safer and more efficient AI solutions that align with societal values.

In conclusion, the EU AI Act marks a pivotal moment in the regulation of artificial intelligence, emphasizing the need for safety, transparency, and ethical responsibility. Whether you’re a developer, a business owner, or simply an AI enthusiast, staying informed about these regulations is essential. For those interested in the future of technology, diving deeper into the implications of the EU AI Act could be a rewarding endeavor. Explore the details, engage in discussions, and be part of shaping a responsible AI landscape.